Estimated Time: 15 Minutes

The Basics

In this Getting Started Guide, you'll learn how to automate document processing by uploading documents to Butler's REST API using Queues.

Uploading documents to the API is a simple two-step workflow:

- Upload documents to begin an extraction job

- Get the extraction results for use in downstream workflows
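These two steps map naturally onto two functions. The sketch below is illustrative only: `process_document`, `upload` and `get_results` are hypothetical names, and the HTTP calls are passed in as plain functions (so the flow can be exercised offline) rather than taken from any Butler SDK.

```python
from typing import Any, Callable

# Hypothetical signatures for the two steps of the workflow
UploadFn = Callable[[str], str]               # file path -> uploadId
ResultsFn = Callable[[str], dict[str, Any]]   # uploadId -> extraction results JSON


def process_document(path: str, upload: UploadFn, get_results: ResultsFn) -> dict[str, Any]:
    """Run the two-step workflow: start an extraction job, then fetch its results."""
    upload_id = upload(path)          # Step 1: upload the document, get an uploadId back
    return get_results(upload_id)     # Step 2: retrieve the extraction results for that upload
```

In the script built in this guide, `upload` corresponds to the POST request in Step 3 and `get_results` to the polling loop in Step 4.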

We'll create a simple Python script to upload a document to Butler for processing and then print the extraction results.

Note: This is a follow-up guide to Extracting data from your documents, so check that out if you haven't already!

Step 1: Prerequisites

Before getting started, you'll need to collect a few things:

API Key

If you haven't already, generate an API Key and copy it down for use within the Python script. See here to learn how to do that.

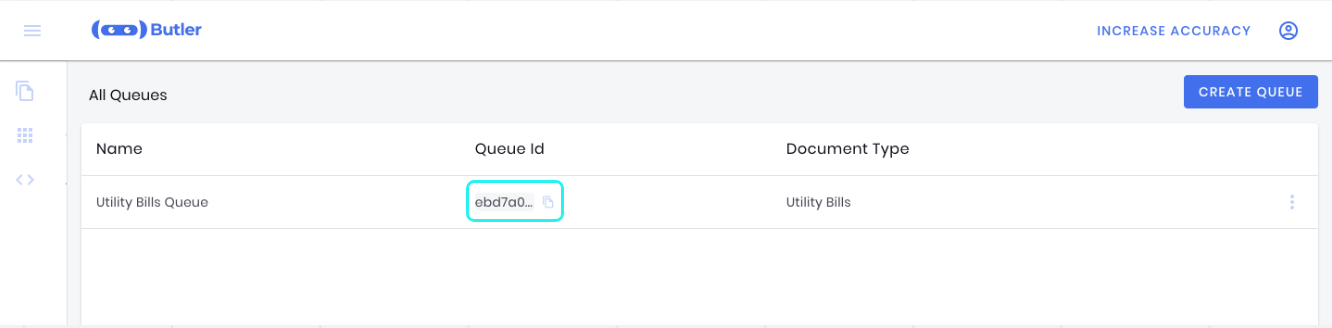
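Hardcoding the key in a script makes it easy to leak (for example, by committing it to version control). A common alternative, sketched here, is to read it from an environment variable. `BUTLER_API_KEY` and `load_auth_headers` are hypothetical names chosen for this example; the header format matches the `Bearer` authorization header used in Step 2.

```python
import os


def load_auth_headers(env_var: str = "BUTLER_API_KEY") -> dict[str, str]:
    """Build Bearer authorization headers from an environment variable.

    BUTLER_API_KEY is a hypothetical variable name chosen for this sketch.
    """
    api_key = os.environ.get(env_var)
    if not api_key:
        # Fail early with a clear message instead of sending an unauthenticated request
        raise RuntimeError(f"Set the {env_var} environment variable to your Butler API key")
    return {"Authorization": f"Bearer {api_key}"}
```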

Queue Id

You'll need a copy of the API Id for the Queue you'd like to upload documents to for processing. To do this, go to the Queues page and copy the Id like this:

Step 2: Prepare environment

First, make sure to install all the necessary libraries for our script:

# We'll use requests for making all the necessary API calls
pip install requests

Once done, create a new Python script named process_docs.py, import the necessary libraries, and define some helpful variables to use throughout:

# Import necessary Python libraries
import os
import time
import mimetypes

import requests

# Specify variables for use in the script below
api_base_url = 'https://app.butlerlabs.ai/api'

# Use the API key you grabbed in Step 1 to define headers for authorization
api_key = 'MY_API_KEY'
auth_headers = {
    'Authorization': f'Bearer {api_key}'
}

# Use the Queue API Id you grabbed in Step 1
queue_id = 'MY_QUEUE_ID'

# Specify the path to the file you would like to process
file_location = 'PATH_TO_MY_FILE'

Step 3: Upload document to API for processing

The first API call to make is to the /queues/{queueId}/uploads endpoint. This endpoint enables us to upload files to our Queue, which starts an asynchronous extraction job.

It returns an uploadId that we'll use to later retrieve the extraction results.
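The upload request is a standard `multipart/form-data` POST in which each document is sent under the `files` form field, which is how this step's script uses the `files=` argument of `requests`. If you want to send several documents in one upload (an assumption on our part, suggested by the `documents` array in the upload response), a small helper along these lines can build the payload. `build_files_payload` is a hypothetical name.

```python
import mimetypes
import os
from typing import Any, BinaryIO, List, Tuple


def build_files_payload(paths_and_files: List[Tuple[str, BinaryIO]]) -> List[Tuple[str, Any]]:
    """Build a requests-style multipart `files` list with one ('files', ...) entry per document."""
    payload = []
    for path, fileobj in paths_and_files:
        # guess_type returns None for unknown extensions; requests then omits the part's Content-Type
        mime_type = mimetypes.guess_type(path)[0]
        # Send only the base file name, not the full local path
        payload.append(("files", (os.path.basename(path), fileobj, mime_type)))
    return payload
```

You would pass the result as `files=` to `requests.post`, keeping the file objects open (for example, inside a `with` block) until the request has been sent.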

# Specify the API URL
upload_url = f'{api_base_url}/queues/{queue_id}/uploads'

# Prepare the file for upload; the with block closes it automatically
mime_type = mimetypes.guess_type(file_location)[0]
with open(file_location, 'rb') as file:
    files_to_upload = [
        ('files', (os.path.basename(file_location), file, mime_type))
    ]

    # Upload the file to the API
    print(f'Uploading {file_location} to Butler for processing')
    response = requests.post(
        upload_url,
        headers=auth_headers,
        files=files_to_upload
    )

# Fail fast on HTTP errors (e.g. an invalid API key) before parsing the body
response.raise_for_status()
upload_json = response.json()
print(upload_json)

If done correctly, you should see a JSON response that looks something like this:

{
  "uploadId": "dd47aead-3143-42ef-9423-42asa3675ed6",
  "documents": [
    {
      "filename": "my_file.pdf",
      "documentId": "f1ea4e64-b514-4b51-aba7-65545ba243a6"
    }
  ]
}

Notice the uploadId property on the response. This is the Id that we'll use to get the extracted results.
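If you need more than the `uploadId` (for instance, to track each document individually), a small helper can pull both pieces out of the upload response. This is a sketch: `parse_upload_response` is a hypothetical name, and only the `uploadId`, `documents`, `filename` and `documentId` keys from the sample response are assumed.

```python
from typing import Any, Dict, Tuple


def parse_upload_response(upload_json: Dict[str, Any]) -> Tuple[str, Dict[str, str]]:
    """Return the uploadId and a filename -> documentId mapping from an upload response."""
    upload_id = upload_json["uploadId"]
    documents = {
        doc["filename"]: doc["documentId"]
        for doc in upload_json.get("documents", [])
    }
    return upload_id, documents
```

If two uploaded files share a file name, the mapping keeps only the last one; key on `documentId` instead if that can happen in your workflow.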

Step 4: Getting the extraction results

We'll use the /queues/{queueId}/extraction_results endpoint to retrieve the extraction results.

Processing is not instantaneous: as a rough guide, a document of up to 5 pages may take around 30 seconds to process, although it is often much faster.

You'll want to poll this results endpoint until processing has completed for each document. The documentStatus property tells you the status of the extraction results for any single document.
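The polling loop in this step waits indefinitely and only checks the first document. As a hedged sketch of a more defensive variant, the function below waits until *every* item reports `Completed` and gives up after an overall timeout. The HTTP request is injected as `fetch_results` (a hypothetical parameter that should perform the GET and return the parsed JSON), so the logic can be tested without network access. Treating an empty `items` list as "not ready yet" is an assumption of this sketch.

```python
import time
from typing import Any, Callable, Dict, List


def wait_for_results(
    fetch_results: Callable[[], Dict[str, Any]],
    interval_s: float = 10.0,
    timeout_s: float = 300.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> List[Dict[str, Any]]:
    """Poll until every document in the upload reports documentStatus == 'Completed'."""
    deadline = clock() + timeout_s
    while True:
        items = fetch_results().get("items", [])
        # Done only when there is at least one item and all of them are Completed
        if items and all(item["documentStatus"] == "Completed" for item in items):
            return items
        if clock() >= deadline:
            raise TimeoutError(f"Extraction not complete after {timeout_s} seconds")
        sleep(interval_s)
```

In the script, you might call it as `wait_for_results(lambda: requests.get(extraction_results_url, headers=auth_headers, params=params).json())`. Injecting `sleep` and `clock` keeps the function deterministic under test.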

extraction_results_url = f'{api_base_url}/queues/{queue_id}/extraction_results'

# Prepare query parameters
upload_id = upload_json['uploadId']
params = {'uploadId': upload_id}

# Poll on extraction results until the extraction job has completed.
# We start with a placeholder so the loop body runs at least once.
extraction_results = {'documentStatus': 'UploadingFile'}
while extraction_results['documentStatus'] != 'Completed':
    response = requests.get(
        extraction_results_url,
        headers=auth_headers,
        params=params
    )
    response.raise_for_status()
    results_json = response.json()

    # items contains the list of extraction results for all documents you
    # uploaded. For this guide, we'll assume you only uploaded a single doc
    extraction_results = results_json['items'][0]

    status = extraction_results['documentStatus']
    if status != 'Completed':
        print('Upload still processing. Sleeping for 10 seconds...')
        time.sleep(10)
    else:
        print('Upload complete. Extraction results ready')

You can see we use the documentStatus property to check the status of the document's extraction results.

If the extraction job completed successfully, you should see output like the following in your shell (the "still processing" line may appear several times, or not at all):

Upload still processing. Sleeping for 10 seconds...
Upload complete. Extraction results ready

Document Status: There are a few different status values for a document's extraction results. For more details on how to use this endpoint in a production setting, see here.
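When an upload contains several documents, a quick tally of statuses is handy for logging progress while you poll. A minimal sketch, assuming only the `items` and `documentStatus` keys shown in this guide; `summarize_statuses` is a hypothetical name, and it makes no assumption about which status values exist.

```python
from collections import Counter
from typing import Any, Dict


def summarize_statuses(results_json: Dict[str, Any]) -> Counter:
    """Count how many documents in an extraction_results response are in each documentStatus."""
    return Counter(item["documentStatus"] for item in results_json.get("items", []))
```

For example, `print(dict(summarize_statuses(results_json)))` inside the polling loop shows how many documents are still in progress on each pass.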

Once the extraction results are ready, let's print them out!

# Print out the extraction results
file_name = extraction_results['fileName']
print(f'\nExtracted data from {file_name}:')

# Each entry in extractedFields holds one field's name, value and confidence score
fields = extraction_results['extractedFields']
for field in fields:
    field_name = field['fieldName']
    extracted_value = field['value']
    print(f'{field_name}: {extracted_value}')

For reference, the full JSON returned by the extraction_results endpoint looks something like this:

{
  "items": [
    {
      "documentId": "c90418ca-038d-4839-8274-b468273cb230",
      "documentStatus": "Completed",
      "fileName": "utility_bill3.pdf",
      "mimeType": "application/pdf",
      "documentType": "Utility Bills",
      "confidenceScore": "High",
      "extractedFields": [
        {
          "fieldName": "Account Number",
          "value": "1234 5678 900",
          "confidenceScore": "High"
        }
      ],
      "tables": []
    }
  ],
  "hasNext": false,
  "hasPrevious": false,
  "totalCount": 1
}

You'll notice that the response includes some metadata about the file, as well as the extracted results in the extractedFields property. You can then use these extracted values in any downstream workflow you like.
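For downstream use, it is often more convenient to turn `extractedFields` into a plain dictionary keyed by field name. The helper below is a sketch: `fields_to_dict` and its `allowed` parameter are hypothetical. The optional filter on `confidenceScore` assumes string labels like the `"High"` in the sample response; the full set of possible labels is not covered in this guide.

```python
from typing import Any, Dict, Optional, Set


def fields_to_dict(item: Dict[str, Any], allowed: Optional[Set[str]] = None) -> Dict[str, Any]:
    """Map each extracted fieldName to its value for one document in `items`.

    If `allowed` is given, only fields whose confidenceScore is in that set are kept.
    """
    return {
        field["fieldName"]: field["value"]
        for field in item.get("extractedFields", [])
        if allowed is None or field.get("confidenceScore") in allowed
    }
```

If a document type defines the same field name twice, the later entry wins in this mapping.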

Assuming your script ran successfully, you should see the following output in your shell:

Extracted data from utility_bill3.pdf:

Account Number: 1234 5678 900

If you reached this point, congrats! You just processed your first document with Butler!

Where to next

You now have all the tools you need to add document processing into any product or workflow. We can’t wait to see what you’re going to build!

If you haven't already, make sure to check out the Getting Started Guide for Extracting data from your documents to learn how to create additional document types and train them to reach high accuracy.

If you're ready to build your production integration, check out the API Reference Section for more details on the specific endpoints.